Unlocking Artificial Intelligence: Exploring Neural Connections

2023/4/15 18:24:14

Artificial intelligence (AI) has advanced significantly over the past few decades, enabling machines to perform tasks that were once considered exclusive to human cognition. As we delve deeper into the complexities of AI, it becomes apparent that understanding the underlying neural connections can light the way toward even more advanced applications. This article explores the intersections of artificial intelligence and neuroscience, shedding light on the potential future of AI technology, including its implications in fields such as healthcare.

Table of Contents

- Understanding Artificial Intelligence

- The Role of Neurons in AI

- AI in Healthcare

- Advances in AI Technology

- The Future of AI: Best Practices and Considerations

- Conclusion

- Commonly Asked Questions

Understanding Artificial Intelligence

What is Artificial Intelligence?

At its core, artificial intelligence is the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, and self-correction. As AI systems become more complex, they increasingly emulate cognitive abilities of the human brain, such as problem-solving and understanding natural language. The term "open artificial intelligence" refers to the movement advocating for open AI technologies, promoting transparency and collaboration in AI development.

The Connection to Neural Networks

Neural networks, inspired by the biological neural networks in the human brain, form the backbone of many AI applications today. A neural network consists of interconnected nodes, or neurons, that work together to process information. This architecture loosely mirrors the way brain cells communicate and cooperate, allowing machines to learn from vast amounts of data.
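To make "interconnected nodes that work together to process information" concrete, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The layer sizes, the weights, and the helper names (`dense_layer`, `forward`) are hypothetical choices for illustration only. Each node computes a weighted sum of its inputs plus a bias and passes it through a sigmoid activation.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each output node is sigmoid(w . x + b).

    weights[j][i] is the connection strength from input i to node j.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        total = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(sigmoid(total))
    return outputs

def forward(inputs, layers):
    """Pass the signal through each (weights, biases) layer in turn."""
    activation = inputs
    for weights, biases in layers:
        activation = dense_layer(activation, weights, biases)
    return activation

# Hypothetical 2-input -> 3-hidden -> 1-output network with hand-picked weights.
network = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

print(forward([1.0, 0.5], network))
```

Real frameworks vectorize this with matrix operations, but the loop form keeps each "neuron" visible.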

Intersection of Artificial Intelligence and Neuroscience

The Role of Neurons in AI

Neuronal Connections

Understanding how artificial neural networks work requires a fundamental grasp of how biological neuronal connections function. Neurons communicate through synapses, junctions where an electrical impulse arriving at one neuron is passed on (usually via chemical neurotransmitters) to the next, allowing rapid information exchange. This concept is central to the design of artificial neural networks, where the connections (or weights) between nodes are adjusted during training to optimize performance.
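The idea of adjusting connection weights during training can be sketched with a single sigmoid neuron learning the logical OR function by gradient descent. This is a minimal illustration under assumed settings (learning rate 0.5, 2000 passes over the data, log loss); the function name `train_neuron` is hypothetical, and real networks apply the same principle across many layers via backpropagation.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(samples, lr=0.5, epochs=2000):
    """Adjust one sigmoid neuron's weights by per-sample gradient descent on log loss."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            pred = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            # For sigmoid output with log loss, dLoss/dz simplifies to (pred - target).
            error = pred - target
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Toy data: the logical OR function.
or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_neuron(or_data)
for x, target in or_data:
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    print(x, target, round(p, 3))
```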

Hippocampal Insights

The hippocampus, a crucial part of the human brain associated with memory and learning, offers insights into how memories are formed and retrieved. Researchers studying the hippocampal region have noted parallels between its functions and those of certain AI systems, particularly in memory retrieval and pattern recognition. By emulating these processes, AI systems can enhance their learning capabilities and improve their performance in various applications.
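One classic model of memory retrieval by pattern completion is the Hopfield network, an associative memory often compared to recurrent circuitry in the hippocampus. The sketch below is an illustration of that general idea, not a method from this article: it stores two hypothetical 8-unit patterns with a Hebbian rule and then recovers one of them from a corrupted cue. The names `train_hopfield` and `recall` are illustrative.

```python
def train_hopfield(patterns):
    """Hebbian rule: W[i][j] = sum over patterns of p[i] * p[j], with W[i][i] = 0."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights

def recall(weights, state, max_steps=10):
    """Update all units synchronously until the state stops changing.

    Note: synchronous updates can oscillate in general; asynchronous
    (one unit at a time) updates are guaranteed to settle.
    """
    state = list(state)
    for _ in range(max_steps):
        new_state = [
            1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
            for row in weights
        ]
        if new_state == state:
            break
        state = new_state
    return state

# Two hypothetical bipolar (+1/-1) memories.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
W = train_hopfield([p1, p2])

noisy = [-1] + p1[1:]  # p1 with its first unit flipped
print(recall(W, noisy))
```

With only two stored patterns in eight units the network is well within capacity, which is why a one-bit error is corrected in a single update.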

AI in Healthcare

Transforming the Medical Landscape

AI in healthcare is an area of significant interest, as AI technologies offer innovative solutions for diagnosis, treatment planning, and patient management. Machine learning algorithms can analyze medical data, predict patient outcomes, and even assist in surgeries. The integration of AI in healthcare not only improves efficiency but also enhances the accuracy of medical interventions.
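Claims about the accuracy of a diagnostic model are usually backed by a few standard measures computed from its predictions. The sketch below, using hypothetical labels (1 = condition present, 0 = absent), computes sensitivity, specificity, and accuracy; the function name `diagnostic_metrics` is illustrative and not tied to any particular tool.

```python
def diagnostic_metrics(y_true, y_pred):
    """Return sensitivity, specificity, and accuracy for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # sick, flagged
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # healthy, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # healthy, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # sick, missed
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "accuracy": (tp + tn) / len(pairs),
    }

# Hypothetical ground truth and model predictions for eight patients.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 0, 0, 0, 1]
print(diagnostic_metrics(y_true, y_pred))
```

Reporting sensitivity and specificity separately matters in medicine because a missed diagnosis and a false alarm usually carry very different costs.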

The Role of Trigeminal Neurons

Research into specific types of neurons, such as trigeminal neurons, has implications for understanding pain and sensory perception. By using AI to analyze data related to these neurons, researchers can develop new treatments for chronic pain and other sensory disorders. This combination of neuroscience and AI has the potential to transform patient care, making it more personalized and effective.

Applications of AI in Healthcare

Advances in AI Technology

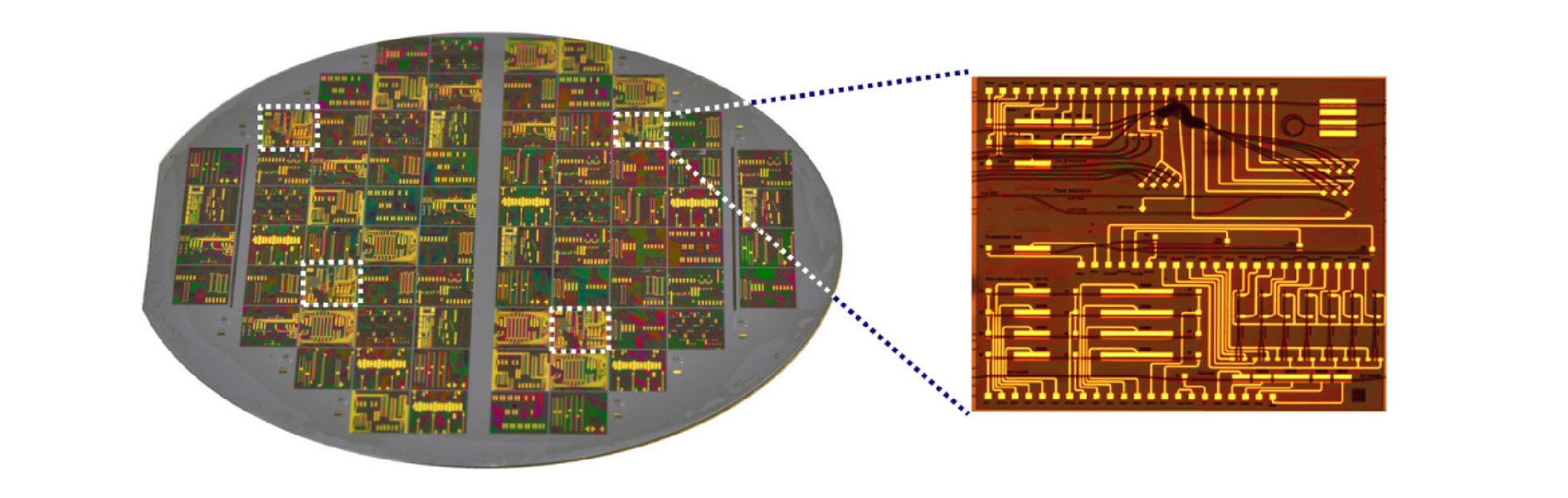

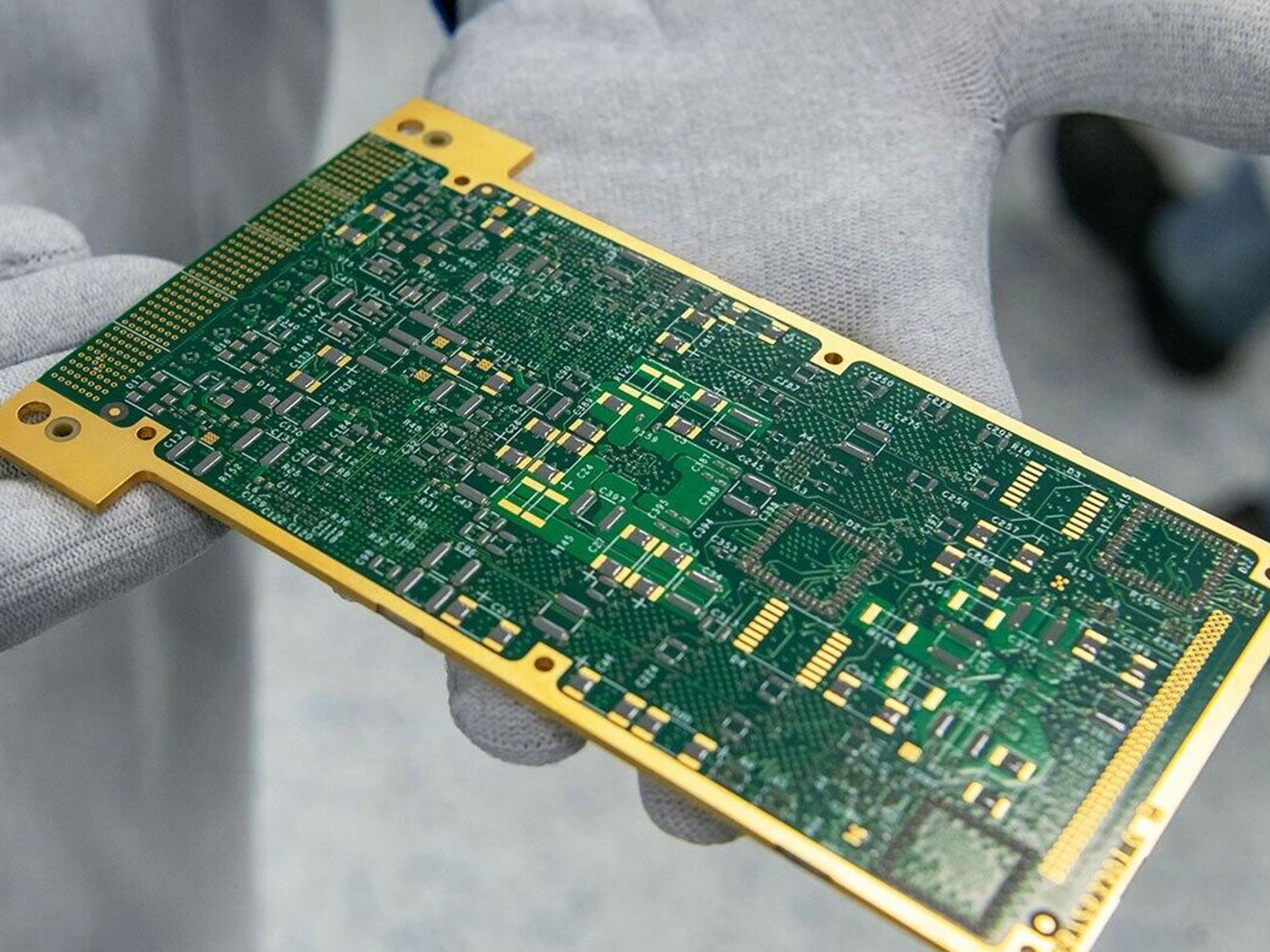

Photonic Integrated Chips

Recent advances in AI technology have led to the development of photonic integrated chips. These chips use light instead of electrical signals to perform computations and can offer significant speed and energy-efficiency improvements over conventional electronic chips for certain workloads. Using photonic integrated chips in AI systems allows complex computations to be executed quickly, opening up new possibilities for real-time data processing.
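A common building block in photonic AI accelerators is the Mach-Zehnder interferometer (MZI), and meshes of MZIs are one approach to performing matrix-vector multiplication with light. The sketch below models a single 2x2 MZI as two 50:50 beam splitters around a tunable phase shifter; the beam-splitter convention and the helper names are assumptions for illustration, and real chips involve many more elements and non-idealities. Tuning the phase `theta` steers light between the two output waveguides, so for light entering the top port the output powers follow sin²(θ/2) and cos²(θ/2).

```python
import cmath
import math

# 50:50 beam splitter (one common convention): mixes two waveguide modes.
S = 1 / math.sqrt(2)
BS = [[S, 1j * S],
      [1j * S, S]]

def matmul(a, b):
    """Multiply two 2x2 complex matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def mzi(theta):
    """Transfer matrix of an MZI: beam splitter, phase shift on the top arm, beam splitter."""
    phase = [[cmath.exp(1j * theta), 0], [0, 1]]
    return matmul(BS, matmul(phase, BS))

def apply(matrix, field):
    """Apply a 2x2 transfer matrix to the complex field amplitudes in two waveguides."""
    return [sum(matrix[i][k] * field[k] for k in range(2)) for i in range(2)]

for theta in (0.0, math.pi / 2, math.pi):
    out = apply(mzi(theta), [1, 0])  # light enters the top waveguide only
    powers = [abs(e) ** 2 for e in out]
    print(f"theta={theta:.2f} -> output powers {powers[0]:.3f}, {powers[1]:.3f}")
```

Because the transfer matrix is unitary, total optical power is conserved; the computation happens through interference rather than by switching transistors.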

Optoelectronic Chips

Similarly, optoelectronic chips, which combine optical and electronic functions, are gaining traction in AI applications. Because they carry data as light, these chips can enable ultra-fast data transfer and processing. As AI systems continue to advance, the integration of optoelectronic technologies could lead to breakthroughs in performance and efficiency.

Recent Advances in AI Technology

The Future of AI: Best Practices and Considerations

Best Artificial Intelligence Practices

As we look to the future of AI, it is crucial to establish best practices that ensure the responsible and ethical use of these technologies. This includes transparency in algorithms, accountability in decision-making, and fairness in data usage. Ensuring that AI systems operate within these frameworks will help build trust among users and society at large.
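Fairness is easier to discuss when it is measured. One simple and widely used check is demographic parity: comparing how often a model makes a positive decision for each group. The sketch below uses hypothetical predictions and group labels; it is only one of several fairness definitions, and the function names are illustrative.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions (1 = approved) for two groups of applicants.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups), demographic_parity_gap(preds, groups))
```

A large gap does not prove unfairness by itself, but it flags a disparity that an accountable team should be able to explain.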

Best Practices for Artificial Intelligence

Conclusion

Unlocking artificial intelligence requires a comprehensive understanding of the intricate neural connections that drive intelligent behavior. As we continue to explore these intersections, the future looks bright for AI technology and its applications across many domains. By embracing the principles of openness and collaboration, we can pave the way for a new era of artificial intelligence that is not only effective but also ethical and inclusive. The journey of discovery in this field is just beginning, and its impact on society will be significant.

Commonly Asked Questions

What is Artificial Intelligence (AI)?

AI is the simulation of human cognitive processes by computer systems.

What are the main applications of AI?

Applications include healthcare, finance, autonomous driving, and customer service.

What is the difference between AI and machine learning?

AI is a broader field; machine learning is a subset focused on data-driven learning.

What are future trends in AI?

Trends include automation, personalized services, ethical considerations, and integration with quantum computing.

How can AI effectiveness be evaluated?

Effectiveness can be measured by accuracy, efficiency, user satisfaction, and long-term impact.