Rock solid! Dipu Technology's large model is fully compatible with Moore Threads GPUs

2024/5/30 10:03:33

Moore Threads and Dipu Technology (Diptech), a leading data intelligence provider in China, have jointly announced that Moore Threads' Kua'e thousand-card intelligent computing cluster and Diptech's enterprise large model Deepexi v1.0 have completed adaptation for both training and inference and have received official product compatibility certification. The certificate is dated September 28, 2023, which indicates that the adaptation work was finished well before it was publicly announced.

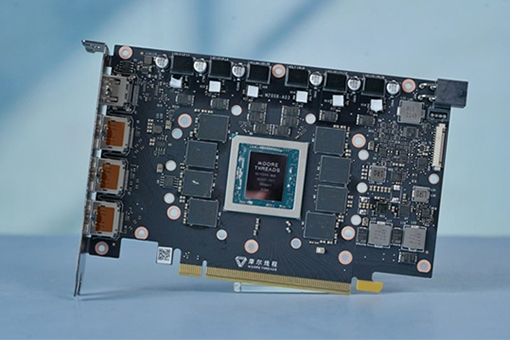

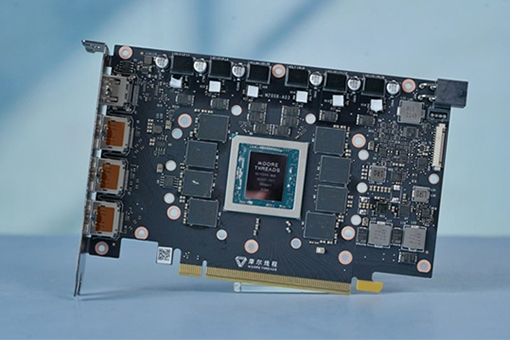

Moore Threads' Kua'e thousand-card intelligent computing cluster is described as the industry's first cluster to run domestic large models through the full process. Built on the MTT S4000 GPU, it provides a full-stack solution that includes broad model coverage, inference acceleration and CUDA compatibility. The cluster also offers a number of core capabilities, such as resuming training from checkpoints, distributed training and cluster reliability.

Since its establishment in 2018, Beijing Diptech Technology Co., Ltd. has focused on providing data intelligence infrastructure, large model products and innovative services for enterprises. Its Deepexi enterprise large model has shown strong performance in multiple fields, including semantic analysis, visual recognition, speech processing and cross-modal interaction. The company also provides a complete model toolchain that helps enterprises carry out data preparation, model training, tuning, deployment and inference efficiently.

In the domestic market, Dipu Technology has worked extensively with companies such as China Haicheng, China Nuclear Equipment Institute and Belle Fashion, and has become a leading company in putting industrial large models into practice. Moore Threads, for its part, has cooperated with Wuwen Xinqiong: the two companies adapted the Wuqiong Infini-AI large model development and service platform, and completed training and testing of the 70-billion-parameter LLaMA2 model and the 3-billion-parameter MT-infini-3B model.

Hanhou Group also used Moore Threads' Kua'e thousand-card intelligent computing cluster to complete distributed training of large models with 7B, 34B and 70B parameters, showing excellent efficiency, accuracy and stability. Following these results, Dipu Technology used the same cluster to complete a pre-training test of the 70-billion-parameter LLaMA2 large language model. The test ran for 77 hours at 100% stability and met the expected training efficiency and compatibility.
