The Role of Autoencoders in Machine Learning

2024/8/6 11:05:08


Machine learning is a scientific field that studies how computers can simulate or realize human learning. It is one of the most advanced and practical applications of artificial intelligence research. Since the 1980s, machine learning has been a key technology for achieving artificial intelligence and has received considerable attention. In recent decades in particular, the field has advanced rapidly and has become an important research topic in artificial intelligence. Machine learning is widely used in knowledge-based systems and plays an important role in natural language processing, reasoning, machine vision, and pattern recognition. Whether a system can learn has become an important criterion for measuring its level of intelligence.

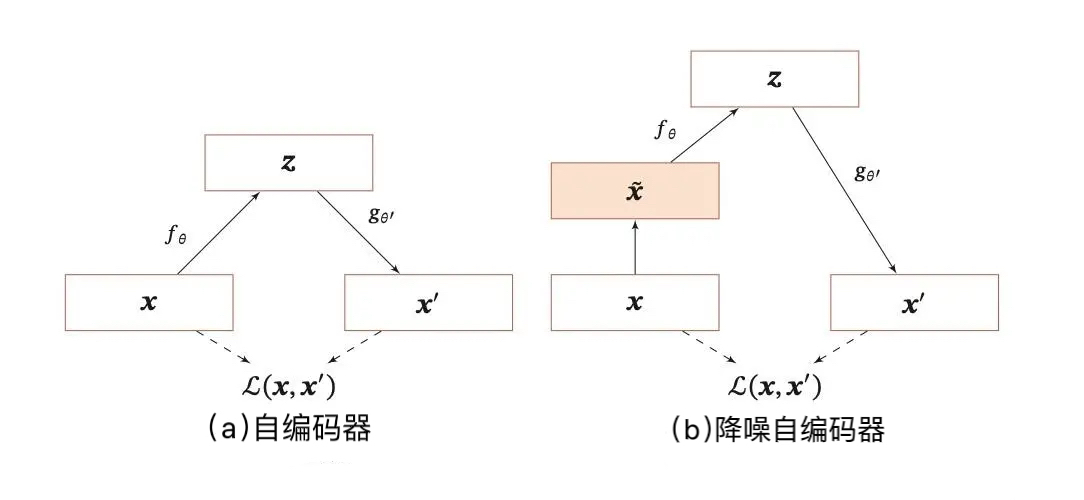

An autoencoder (AE) is an artificial neural network (ANN) used for semi-supervised and unsupervised learning. It consists of two parts: an encoder, which maps the input to an internal code, and a decoder, which reconstructs the input from that code. Depending on the architecture, an autoencoder can be a feedforward or a recurrent neural network. Autoencoders are mainly used for representation learning and are widely applied to dimensionality reduction and anomaly detection. Autoencoders that contain convolutional layers have been particularly effective in computer vision tasks such as image denoising and neural style transfer.
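The encoder and decoder structure can be illustrated with a minimal sketch in NumPy. It is not a reference implementation: the single `tanh` hidden layer, the mean squared error loss, plain gradient descent, and every name in it (`train_autoencoder`, `encode`, `reconstruct`, `hidden_dim`, `lr`) are assumptions chosen for illustration.

```python
import numpy as np

def train_autoencoder(X, hidden_dim=2, lr=0.1, epochs=500, seed=0):
    """Fit a one-hidden-layer autoencoder to X with plain gradient descent.

    Returns the parameters and the reconstruction loss recorded at each epoch.
    """
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W_enc = rng.normal(scale=0.1, size=(d, hidden_dim))
    b_enc = np.zeros(hidden_dim)
    W_dec = rng.normal(scale=0.1, size=(hidden_dim, d))
    b_dec = np.zeros(d)
    losses = []
    for _ in range(epochs):
        # Forward pass: encoder compresses, decoder reconstructs.
        H = np.tanh(X @ W_enc + b_enc)
        X_hat = H @ W_dec + b_dec
        err = X_hat - X
        losses.append(float(np.mean(err ** 2)))

        # Backward pass for the mean squared reconstruction error.
        g_out = 2.0 * err / err.size
        g_W_dec = H.T @ g_out
        g_b_dec = g_out.sum(axis=0)
        g_pre = (g_out @ W_dec.T) * (1.0 - H ** 2)  # derivative of tanh
        g_W_enc = X.T @ g_pre
        g_b_enc = g_pre.sum(axis=0)

        W_enc -= lr * g_W_enc
        b_enc -= lr * g_b_enc
        W_dec -= lr * g_W_dec
        b_dec -= lr * g_b_dec
    return (W_enc, b_enc, W_dec, b_dec), losses

def encode(X, params):
    """Map inputs to their low-dimensional codes."""
    W_enc, b_enc, _, _ = params
    return np.tanh(X @ W_enc + b_enc)

def reconstruct(X, params):
    """Encode the inputs and decode them back to the input space."""
    _, _, W_dec, b_dec = params
    return encode(X, params) @ W_dec + b_dec
```

In this sketch, dimensionality reduction corresponds to keeping the output of `encode`, while anomaly detection would typically flag inputs whose reconstruction error `np.mean((reconstruct(X, params) - X) ** 2, axis=1)` is unusually large.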

Early research on autoencoders aimed to solve the "encoder problem" in representation learning, that is, the challenge of performing dimensionality reduction with neural networks. In 1985, David H. Ackley, Geoffrey E. Hinton, and Terrence J. Sejnowski first tried the autoencoder algorithm on Boltzmann machines and explored its potential for representation learning. In 1986, the backpropagation (BP) algorithm was formally proposed, and the autoencoder, trained with BP under the name "self-supervised backpropagation," was studied further. In 1987, Jeffrey L. Elman and David Zipser first applied autoencoders to representation learning on speech data. In the same year, Yann LeCun proposed a formal autoencoder structure and built a data-denoising network using a multi-layer perceptron (MLP). Around the same time, Bourlard and Kamp also used MLP autoencoders in dimensionality reduction research, which attracted widespread attention. In 1994, Hinton and Richard S. Zemel applied the Minimum Description Length (MDL) principle to autoencoders and constructed the first generative model based on them.

The goal of a sparse autoencoder is to learn sparse representations of input samples. This matters most for overcomplete autoencoders, whose code has more dimensions than the input. Representative figures include Professor Andrew Ng and Professor Yoshua Bengio. Their method adds a regularization term that controls sparsity to the original loss function, so that a sparse representation emerges during optimization.

The core idea of the denoising autoencoder is to make the encoder more robust and to avoid overfitting. A common method is to add random noise to the input data, for example by randomly setting part of the input to zero or masking it, and to train the network to recover the clean input. Masking ideas of this kind were later widely used in models such as BERT. Another approach draws on regularization, for example adding the norm of the encoder's Jacobian to the objective function, as in the contractive autoencoder, so that the learned features are less sensitive to small changes in the input.
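The three regularization ideas described here (a sparsity term, input noise, and a Jacobian norm) can be sketched as small NumPy helpers. These are illustrative assumptions rather than the cited authors' formulations: the sparsity term is a plain L1 penalty (a KL-divergence term is also common), the noise is zero-masking, and the Jacobian formula assumes a single-layer encoder of the form `tanh(x @ W_enc + b)`. All function and parameter names are hypothetical.

```python
import numpy as np

def mask_noise(X, drop_prob, rng):
    """Denoising corruption: set each input entry to zero with probability drop_prob."""
    keep = rng.random(X.shape) >= drop_prob
    return X * keep

def l1_sparsity_penalty(H, weight=1.0):
    """Sparsity term: weighted mean absolute activation of the code H."""
    return weight * float(np.mean(np.abs(H)))

def contractive_penalty(H, W_enc):
    """Squared Frobenius norm of the encoder Jacobian, averaged over samples.

    Assumes H = tanh(X @ W_enc + b), so dH[j]/dX[i] = (1 - H[j]**2) * W_enc[i, j].
    """
    dh_sq = (1.0 - H ** 2) ** 2          # shape (n_samples, hidden_dim)
    w_sq = np.sum(W_enc ** 2, axis=0)    # shape (hidden_dim,)
    return float(np.mean(dh_sq @ w_sq))
```

In a training loop, a denoising autoencoder would encode `mask_noise(X, drop_prob, rng)` but still compare the reconstruction against the clean `X`; the sparse and contractive variants would add their penalty, scaled by a small coefficient, to the reconstruction loss.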

The well-known researcher Jürgen Schmidhuber proposed autoencoders based on convolutional networks and, later, LSTM autoencoders. Diederik P. Kingma and Max Welling proposed the Variational AutoEncoder (VAE), a method based on variational inference that became a milestone result. Subsequent researchers built on it to develop variants such as InfoVAE, β-VAE, and FactorVAE. More recently, some researchers have incorporated adversarial modeling to propose Adversarial AutoEncoders, with good results. Some of these methods can be combined, for example by stacking different types of autoencoders.
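For orientation, the objective that a VAE maximizes is usually written as the evidence lower bound (ELBO). The notation below is the conventional one and is not taken from this article: $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, $p(z)$ is the prior over the latent code, and $\beta$ is a weighting coefficient.

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - \beta \, D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

With $\beta = 1$ this is the standard VAE objective: the first term rewards accurate reconstruction and the second keeps the encoder's distribution close to the prior. β-VAE uses $\beta > 1$ to put more weight on the second term.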
